reAct pt. 2

Testing the benefits of Specific Models

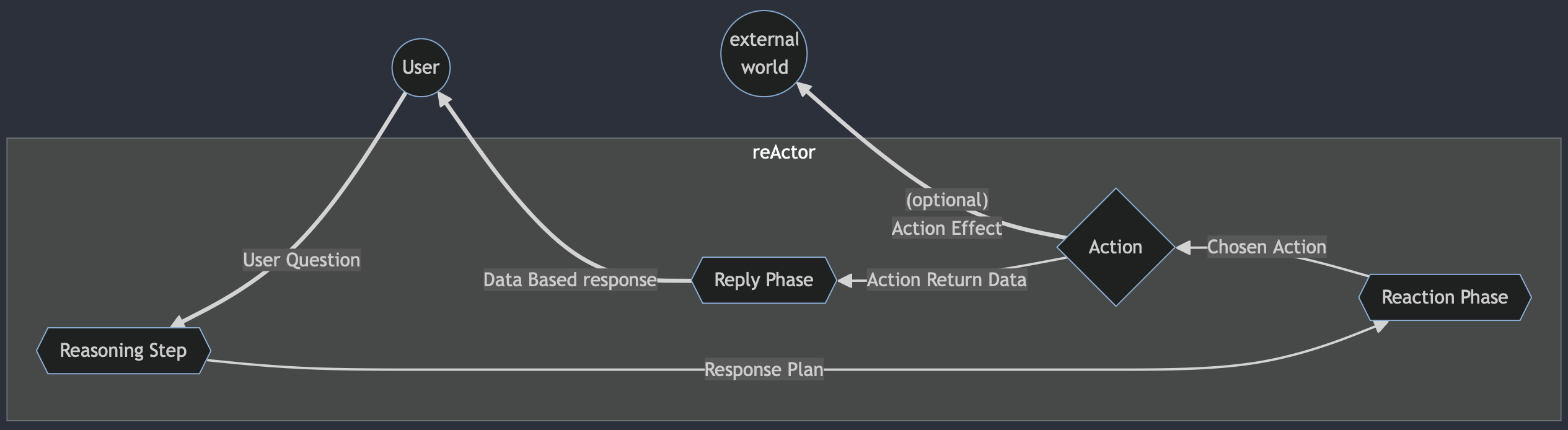

As outlined in the first reAct blog post, our implementation of reAct allows us to utilize specialized LLMs to execute different phases of the reAct cycle. This allows for much more precision and control over the responses coming in each phase.

We tried a few larger conversational models, but found that even very capable models like GPT-4 would often simply try to have a conversation, even after the introduction of the reasoning phase and a lot of prompt engineering. Instead, we landed on using Airoboros 7b 3.1.2, as quantized by TheBloke, as the LLM that would perform both the reasoning and reaction phases. Airoboros was able to follow our instuctions for these phases with great precision, despite its relatively diminutive size.

Benifits of Airoboros:

- Airoboros as a model is trained for obedience, to follow instuctions. With this specialization, it is much more capable of following our Reasoning and reaction prompts.

- Conversational models, even one as powerful as GPT-4 sometimes fails to do as it’s told, particularly if there’s a conversation going on, since it’s not sure if it should respond to the conversation or the prompt. We found this issue quite resistant to prompt engineering.

- Airoboros is a 7-billion parameter model, much smaller than

GPT-3.5’s 175-billion parameters, andGPT-4’s rumored 1.5 trillion parameters.- 7b is so small that we can run it in just 5GB of (video) memory in our office’s local dev-server, or deploy very inexpensively to a small cloud instance.

- This smaller size also significantly reduces the time the model needs to complete a single inference, as we will elaborate on shortly.

As we have improved the phases of the reAct cycle to generate better, more useful, more structured contextual information, we figured that we could also probably utilize a much smaller model for the final reply phase, too. In fact, we found that 13 billion parameter, conversational models were capable of creating useful responses to the user’s message, when given the context of the previous reAct phases. We haven’t settled on a specific model for this task yet, but now that we’re using local models for all of the reAct phases that utilize an LLM, we can start taking measurements of how long each phase takes to complete, and how much money we can save by not utilizing a full-service LLM API like OpenAI’s GPT models:

| Reasoning model | Reaction model | Reply model | Total Time (seconds) | Total Cost (USD) |

|---|---|---|---|---|

| gpt-4-turbo | gpt-4-turbo | gpt-4-turbo | 49 | 0.38540 |

| gpt-4 | gpt-4 | gpt-4 | 65 | 1.06740 |

| gpt-3.5-turbo | gpt-3.5-turbo | gpt-4-turbo | 43 | 0.20014 |

| gpt-3.5-turbo | gpt-3.5-turbo | gpt-3.5-turbo | 22 | 0.03558 |

| Airoboros-M-7B-3.1.2 | Airoboros-M-7B-3.1.2 | gpt-4-turbo | 21 | 0.18168 |

| Airoboros-M-7B-3.1.2 | Airoboros-M-7B-3.1.2 | gpt-3.5-turbo | 17 | 0.01712 |

| Airoboros-M-7B-3.1.2 | Airoboros-M-7B-3.1.2 | LlongOrca-13B-16K | 7 | 0.00016 |

(Cost is calculated using tokens in/out according to OpenAI’s pricing, or assumed to be 0.00000005 USD per 1000 tokens for local models, based on local electricity pricing, but excluding hardware acquisition costs.)

The numbers for OpenAI’s models are inflated by our relatively untrimmed prompting right now, but is still enormous, compared against the cost of electricity to run local models. Even factoring in the cost of the hardware, it only takes a few thousand reAct cycles to make up the difference.

Action Arguments

Potentially the biggest change that we’ve made since the last reAct blog was adding the ability for the reaction phase to be able to generate arguments for action calls.

This solved a large problem when trying to integrate a Wikipedia and Google action. Previously we were just passing along the results from the reaction phase stright into the action execution, for example just passing the user question straight into a google search. This wouldn’t work very for Google, since the user’s question wouldn’t be well formed as a Google search, and would frequently completely break Wikipedia, since the user question would be too long for the wikipedia API to handle.

By adding the ability to include arguments we are no longer limited to using certain actions and there is no limit to what kinds of algorithms we can use, and we can interpret and refine the arguments/queries that get passed into an action. This change enables the uitization of all kinds of resources in the action phase, from wikipedia, google, Wolfram, stock market data, database lookups, and even other generative-AI API’s, such as Midjourney.

Here is the single-shot example that we use in the reaction prompt to generate JSON objects that describe what action(s) the agent will perform in the action phase.

[

{

"ACTION": "ACTION Name",

"ARGUMENTS":

[

"ACTION argument"

],

"EXPLANATION": "ACTION Explanation"

}

]ReAct (chat) logging

Another major change we made was to maintain a “chatlog” (message list) within the reAct cycle, using the chat completion LLM interfaces rather than the plain text completion.

As a quick reminder, here’s the structure of the reActor:

real user message: how did the CU buffs do in their last college football game?

After this point, both “user” and “system” in this example chatlog is the reActor itself, structuring the prompting and context that the LLMs are working off of. This whole process is happening within the reActor, and the actual user who asked the question about the buffs game will only see the final, reply phase’s response.

system:You are a part of the OoriChat Ambassador, a useful assistant who will respond to user’s messages the absolute best of their ability.You are the decision making thought process for the OoriChat Ambassador, where you go through a cycle of REASONING, REACTION, ACTION, and REPLY in order to make useful and helpful responses to a user.

user:You are the decision making thought process for the OoriChat Ambassador, where you go through a cycle of REASONING, REACTION, ACTION, and REPLY in order to make useful and helpful responses to a user.This is the first phase, REASONING, which you are currently performing; you are going to use reason and logic to determine the steps you need to take to respond to the user’s most recent message.

As the thought process of the OoriChat Ambassador, explain your REASONING (and only your REASONING, not any other phases of the cycle) on what the Ambassador needs to do, who will then take ACTION based on your REASONING.

Here is the user’s message: “How did the CU buffs do in their last college football game?”

assistant:I should use a search engine to find the most recent score from a Colorado University football game.

user:You are the decision making thought process for the OoriChat Ambassador, where you go through a cycle of REASONING, REACTION, ACTION, and REPLY in order to make useful and helpful responses to a user.This is now the REACTION phase, where, based on the thoughts provided by the REASONING phase, you decide on what ACTION(s) to take. Only take more than one ACTION if it is absolutely nessisary.

Be aware that some ACTIONs may require you to respond with extra arguments such as a search subject.

Here is the user’s message: “How did the CU buffs do in their last college football game?”

Here is a list of your available ACTIONs:

- Descripton: Use the “Google” ACTION to find information you don’t have. This tool uses the Google Search engine to find information on the internet.

- ARGUMENT: Respond with a Google search query. Example: “current president of the United States” or “weather in Dubai”.

- Stocks

- Description: Use the “Stocks” ACTION to get information about a any time a stock (or its price) is mentioned.

- ARGUMENTs: Respond with the ticker symbol for the stocks you need the information of. Example: “IBM” for IBM, “T” for AT&T, or “BALL” for Ball Aerospace Corp.

- Oori

- Description: Use the “Oori” ACTION to get information about oori (aka Oori Data), the company you represent.

- Wikipedia

- Description: Search Wikipedia for encyclopedic information. This tool uses the Wikipedia API to find information on Wikipedia. Wikipedia does not usually contain current information; it is an encyclopedia. Use this XOR Google, not both.

- ARGUMENT: Respond with the wikipedia page name.

- Basic

- ACTION Description: Use the “Basic” ACTION only when no other tools are needed.

You will construct a JSON object that describes the ACTION(s) you will take with the following format:

[ { "ACTION": "ACTION Name", "ARGUMENTS": [ "ACTION argument" ], "EXPLAINATION": "ACTION Explaination" } ]You will now construct a JSON object as you were just shown; a list of dictionaries which have an ACTION, a list of ARGUMENTS(s), and an EXPLAINATION. Do not generate output that isn’t in properly formatted JSON.

assistant:[ { “ACTION”: “Google”, “ARGUMENTS”: [ “Latest Colorado University football score” ], “EXPLAINATION”: “I chose the Google action because I don’t have the information I need to respond to the user’s message.” } ]

The Google action then fires, searching for “Latest Colorado University football score”.

user:You are the decision making thought process, where you go through a cycle of REASONING, REACTION, ACTION, and REPLY in order to make useful and helpful responses to a user.This is the final phase, REPLY, where you will use the ACTION that you decided upon in the previous phase to respond to the user.

Return(s) from the ACTION(s): {search results from Google}

REPLY to the following user message utilizing any combination of your own knowledge, the ACTION’s return, and the chat history: “How did the CU buffs do in their last college football game?”

assistant:The CU Buffs lost their last game 16-28 to the UCLA Bruins.

As mentioned above, only this final response is sent to the user.

We have even experimented with appending the reAct log to the end of the chatlog up to that point, allowing for the LLMs to grab context from the chat history, although this is fragile and requires futher investigation. The other solution we have for chat history is to have an ACTION that will grab the chat history from the database and perform a semantic search on it, allowing the agent to have a theoretically “infinite” memory. We expect to land on a hybrid of both solutions, so stay tuned for future blog posts.

Our main driver for the approach described in this article is avoiding problems where the LLM loses focus and context of the reAct cycle. Chatlogs are structures to maintain a conversation’s history, and we are already formulating the reAct cycle as a conversation between the agent’s thought process and the reActor anyway.

As we’ve been drafting this article, work has continued, and we’ll soon have a part 3 on this topic, including a novel approach for interactively applying AI actions to human UX (“chattable settings”), creating windows into the reActor that the user can observe, and even futher improvements to the prompting structure of the reActor.

About the authors

Aidan is a full-stack and AI engineer at Oori contributing to the reAct engine project. Just before starting at Oori in the summer of 2023, Aidan got his undergraduate degree in computer science from the University of Colorado.

aidan@oori.dev

Osi is a backend software and AI engineer, working on the infrastructure behind Oori’s projects and products. Before helping found Oori, they studied mechanical engineering at the University of Colorado.

osi@oori.dev